In the rapidly evolving world of artificial intelligence, few voices resonate as clearly and with as much foresight as Charlene Li. A New York Times bestselling author and founder of Quantum Networks Group, Li has long been recognized for her expertise in leadership, technology, and transformation.

With a career spanning more than three decades of guiding businesses through digital disruption—from the rise of the internet to the emergence of social media—she now turns her attention to perhaps the most consequential shift yet: the rise of AI and its ethical application in marketing.

In a recent conversation about her forthcoming book, Winning with AI, co-authored with Katja Wells, Li shared a framework for ethical AI implementation rooted not in technical know-how alone, but in organizational leadership and values. Her insights make it clear that success with AI will depend on both innovation and integrity.

TL;DR: Key Insights from the Charlene Li Interview

AI strategy is a leadership issue, not just a technical challenge.

Trust is foundational to ethical AI use with customers and internal stakeholders.

Leaders can build trust using the AI Trust Pyramid.

Organizations should form a cross-functional AI council to align AI use with company values.

Ethical concerns include synthetic data, transparency, and workforce impact.

The biggest risk? Inaction due to fear—marketers must model responsible, imaginative AI use.

The future of AI in marketing lies in trusted autonomous systems, guided by clear ethical standards.

Leadership Is the Differentiator in Ethical AI Adoption

Li emphasizes that effective AI adoption is fundamentally a leadership challenge: the power of AI cannot be unlocked without the proper cultural and leadership foundation.

“After watching digital change for over 30 years, I’ve learned it’s never about the technology,” she explains. “It’s always about the people.”

Organizations that fail to align AI deployment with leadership values and company culture risk missing the ethical mark and the opportunity to lead.

She notes that this alignment becomes even more critical as AI's scale and impact rival those of the internet’s earliest days. Yet, unlike previous technological waves, AI introduces far more frequent and profound ethical complexities.

Why AI Raises Deeper Ethical Questions

Li likens the current AI moment to the creation of the internet in terms of ubiquity and impact, but says AI raises the stakes.

“This is fundamentally changing the relationship between us as humans and technology,” she observes. “It becomes an existential question—who are we as humans when the technology can do it better than we can?”

That shift introduces new ethical tensions, especially for marketers. From synthetic data used in virtual focus groups to hyper-personalized campaigns, AI’s capabilities force a reconsideration of long-standing practices. Li’s central message is that ethical decision-making begins with clearly articulated values.

“There’s no one right answer for how transparent to be about using AI,” she says. “It depends on what kind of relationship you want with your customers—and that depends on your values.”

Building Trust with the AI Trust Pyramid

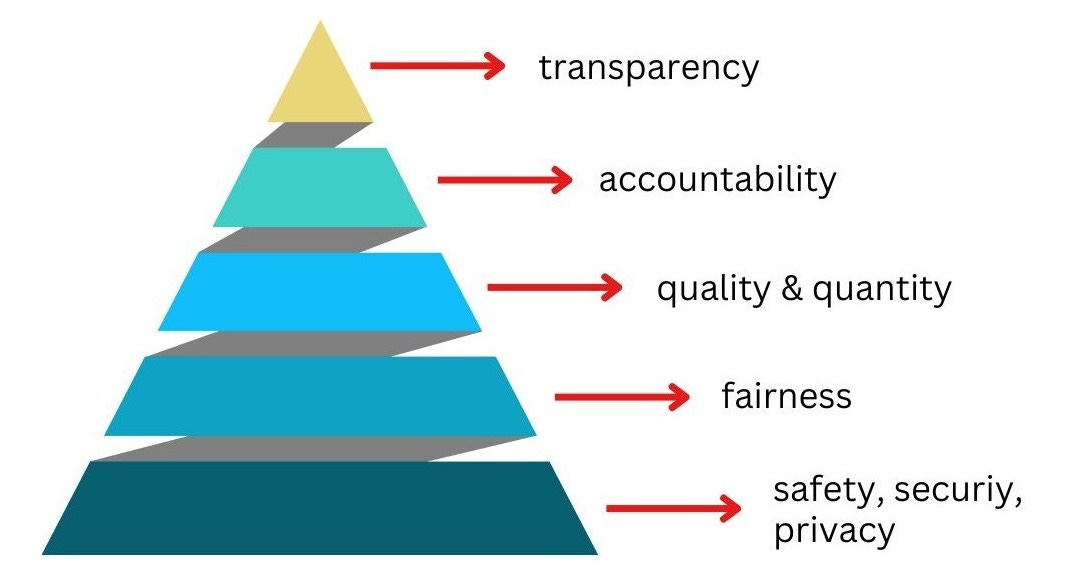

To help organizations operationalize these values, Li developed the AI Trust Pyramid, modeled after Maslow’s hierarchy of needs. The framework identifies five ascending layers of trust:

Safety, Security, and Privacy – Establishing a secure foundation.

Fairness – Defining what equitable outcomes look like within the organization.

Quality and Accuracy – Ensuring reliability and consistency in AI outputs.

Responsibility and Accountability – Assigning human oversight and ownership.

Transparency – Communicating clearly about how and why AI is used.

Each level builds upon the previous one. Fairness and quality cannot be guaranteed without a secure and private foundation. And without transparency, trust remains elusive.

The AI Council: A Minimum Viable Team for Ethical Oversight

Li advocates for a cross-functional AI council to align AI initiatives with business goals and ethical standards. Far from a bureaucratic committee, this “minimum viable team” (MVTA) consists of four to five representatives who collectively shape organizational AI strategy.

The core team typically includes leaders from business strategy, digital or AI functions, customer or commercial perspectives, and human resources. While they gather input from across departments, their small size ensures agility and focus.

“The AI council isn’t about representation from every function,” Li explains. “It’s about aligning AI use with company values and ensuring customer and employee interests are at the table.”

Ethical Trade-offs: Efficiency vs. Integrity

One of the most pressing ethical questions in AI adoption is whether gains in efficiency come at the cost of jobs. Li acknowledges this concern but notes that the reality is often more nuanced.

Rather than replacing workers outright, many organizations use AI to augment performance, especially among underperforming staff. One example Li shares involves a call center that used AI to raise the capabilities of its bottom 25% of performers. This freed up capacity to pursue deeper customer engagement and even enter new business areas, such as AI-powered legal services.

“The goal was never to eliminate jobs,” Li says. “It was to create new value, and that starts with being transparent about your intentions.”

This theme recurs throughout her framework: ethical implementation means proactively communicating goals, even if some roles inevitably evolve or disappear. Transparency builds trust and allows employees to envision their place in an AI-enhanced future.

A Call for Imaginative, Responsible Use

Perhaps Li’s most counterintuitive insight is that inaction can be an ethical failure. She believes the greatest risk marketers face isn’t misusing AI—it’s being so afraid of doing the wrong thing that they do nothing at all.

“The biggest risk is not being imaginative enough,” she asserts. “When marketers hesitate, bad actors fill the void. What we need are strong, positive use cases that raise the bar for everyone else.”

Rather than focusing exclusively on banning bad uses, Li recommends defining and sharing examples of responsible use. This enables best practices to emerge and sets expectations that others can follow.

Toward Trusted Autonomy

Li foresees a future where autonomous AI systems handle increasing responsibilities, provided clearly defined ethical guidelines govern them. Ironically, machines may prove more consistent than humans in following these rules.

“If we can trust AI to follow the guidelines better than we can ourselves, we may no longer need humans in the loop for every decision,” she says. “That’s the ultimate unlock.”

But this outcome hinges on the trust pyramid being in place. Without security, fairness, accuracy, responsibility, and transparency, the promise of autonomous systems remains out of reach.

Conclusion

Charlene Li’s approach to ethical AI marketing is both pragmatic and visionary. She challenges leaders to ground their decisions in values, embrace cross-functional oversight, and model the ethical behavior they want the technology to reflect. Her guidance for marketers navigating the AI revolution is clear: trust is the foundation, and leadership is the lever.

Check Out Charlene’s Resources

Here are links to resources Charlene has produced that you may want to download or subscribe to:

Webinar: Unlocking the Power of Generative AI covers how to set up a secure AI playground and exercises to get you started.

Webinar: Developing a Winning Generative AI Strategy goes step-by-step on developing a generative AI strategy.

Winning with AI, her soon-to-be-published book, provides a 90-day roadmap for companies seeking to integrate AI into their operations.

Coursera course: Navigating Generative AI: A CEO Playbook

LinkedIn Newsletter: Leading Disruption

Email newsletter: The Big Gulp Newsletter

Weekly live stream: Every Tuesday, 9 am PST / 12 pm EST

Speaking engagements: Send an email to speaking@charleneli.com

More at charleneli.com

In next week’s issue…

As businesses race to embrace generative AI, a common theme is emerging: everyone’s using it, but few are using it well. In next week’s issue, we address the problem, introducing a Maslow-style pyramid of our own making—the Generative AI Business Adoption Hierarchy—a framework for ethical business adoption. It outlines five progressive stages organizations go through as they integrate generative AI into their operations, culture, and strategy.

If you found this issue helpful, please forward it to someone who would benefit from the information. If you were forwarded this, please consider subscribing.
